Test of different network architectures

As assumed so far, the database is chosen (CASIA-WebFace), the input image is preprocessed (112x96, MTCNN), mirroring is the only Data Augmentation, and CrossEntropy is used as the training loss. The last missing element of the pipeline is the network architecture. Recent years have brought many diverse architecture ideas, such as ResNet, Inception and DenseNet. In addition, the Face Recognition community has introduced its own architectures, such as FaceResNet, SphereNet, LightCNN and FudanNet. Here we will look more closely at the latter group, since we know their performance and their low computational requirements.

We will also include some older architectures to check whether the new ones really work much better than architectures from 2014 or earlier. We chose LeNet, DeepID, DeepID2+ and CASIA.

This is not the final choice of architecture. We just want a reasonable baseline that will accompany us through all the other tests. There are so many tests because we want to make sure that the current pipeline works well and that our implementation matches the results from the papers.

Description of Architectures

LeNet - the most popular convolutional architecture. Input image: 28x24.

DeepID - One of the first specialized networks used for Face Recognition. Compared to LeNet, it has more filters, and the final feature comes from merging data from two layers. Input image: 42x36.

DeepID2+ - An extension of DeepID with many more filters and a feature size of 512. Input image: 56x48.

Casia-Net - Architecture proposed after the success of VGG and GoogLeNet. It uses 3x3 kernels and Average Pooling.

Light-CNN - The author proposes MFM as the activation function, which is an extension of MaxOut. In his experiments it performs better than ReLU, ELU or even PReLU.

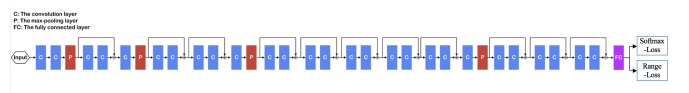
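To make the MFM idea concrete, here is a minimal sketch in plain Python. It assumes the 2/1 variant, where the channels are split into two halves and the element-wise maximum is kept. The function name `mfm` and the sample values are made up for illustration and are not taken from the Light-CNN code.

```python
def mfm(channels):
    """Max-Feature-Map (2/1 variant, assumed here).

    `channels` is a list of 2k feature maps, each a flat list of activations.
    Returns k feature maps: the element-wise max of channel i and channel i + k.
    """
    if len(channels) % 2 != 0:
        raise ValueError("MFM needs an even number of channels")
    half = len(channels) // 2
    first, second = channels[:half], channels[half:]
    return [
        [max(a, b) for a, b in zip(map_a, map_b)]
        for map_a, map_b in zip(first, second)
    ]


# Hypothetical activations: 4 channels with 3 values each.
features = [
    [0.2, -1.0, 3.0],
    [0.5, 0.5, 0.5],
    [1.0, -2.0, 0.0],
    [-0.5, 0.7, 0.4],
]
reduced = mfm(features)  # 2 channels remain
```

Note that, unlike ReLU, nothing is clipped at zero: a negative activation survives whenever it is the larger of the two competing values, and the number of channels is halved.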

FaceResNet - Architecture proposed by the author of CenterLoss and RangeLoss. It uses residual connections, as in ResNet, but it does not use BatchNorm and replaces the ReLU activation functions with PReLU.

SphereFace - A newer version of FaceResNet, which mainly replaces each MaxPool with a Convolution with stride 2.

Fudan-Arch - The idea of FaceResNet, but with BatchNorm.

Most of the above architectures also include DropOut, and others have their own regularization method. Even if we replicated the results exactly as stated in the papers, we still could not compare them with each other because of the different settings. This is why we will completely ignore any special regularization method (like CenterLoss) and run two experiments for each architecture: with and without DropOut. This also helps to validate the current implementation against the results from the papers.
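The experiment plan can be written down as a small grid. This is only a sketch: the dictionary keys, the `build_experiments` helper and the architecture labels (taken from the descriptions above, without the specific depth variants) are assumptions, not the actual training configuration.

```python
from itertools import product

# Labels from the descriptions above; the concrete variants are not listed here.
ARCHITECTURES = [
    "LeNet", "DeepID", "DeepID2+", "Casia-Net",
    "Light-CNN", "FaceResNet", "SphereFace", "Fudan-Arch",
]


def build_experiments(architectures):
    """One run per (architecture, dropout on/off); everything else is fixed."""
    return [
        {
            "arch": arch,
            "dropout": use_dropout,
            "loss": "cross_entropy",     # no CenterLoss or other special terms
            "augmentation": ["mirror"],  # the only augmentation in the pipeline
        }
        for arch, use_dropout in product(architectures, (False, True))
    ]


experiments = build_experiments(ARCHITECTURES)
```

Keeping the loss and augmentation fixed is what makes the runs comparable: the only things that change are the architecture and the DropOut switch.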

To evaluate each network we will use its quality metrics (accuracy and loss value) and time-to-score. For each architecture, the model with the lowest validation loss was chosen, i.e. the LFW result did not affect the choice of model, even when a model from another epoch achieved a better LFW result.
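The selection rule is simple enough to show as code. The sketch below uses a hypothetical `Checkpoint` record and made-up numbers. The only point is that the benchmark accuracy is stored but never consulted.

```python
from dataclasses import dataclass


@dataclass
class Checkpoint:
    epoch: int
    val_loss: float
    lfw_acc: float  # recorded for reporting only


def select_checkpoint(history):
    """Pick the checkpoint with the lowest validation loss, ignoring LFW."""
    return min(history, key=lambda c: c.val_loss)


# Made-up training history for illustration.
history = [
    Checkpoint(epoch=10, val_loss=1.90, lfw_acc=0.970),
    Checkpoint(epoch=20, val_loss=1.62, lfw_acc=0.975),
    Checkpoint(epoch=30, val_loss=1.70, lfw_acc=0.978),
]
best = select_checkpoint(history)
```

In this made-up history, epoch 30 has the better LFW accuracy, yet epoch 20 is selected. This keeps the benchmark out of the model selection loop, so the reported LFW number is not tuned on LFW itself.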

Results

CASIA Training and LFW

First of all, let's look more closely at the architectures with DropOut. There is no clear winner here: Fudan-Full, SphereFace64 and Light-CNN29 are comparable overall, but each of them dominates in one of the benchmarks (validation loss, LFW, BLUFR). Very close to them is FaceResNet, which trained much faster. It is very interesting that many networks achieve > 97% on LFW, while the BLUFR protocols show the real difference in quality. For example, a 0.7% difference on LFW between CASIA and SphereFace64 translates to 16% in BLUFR-FAR 1%.

What about the architectures without any regularization? Here the clear winner is Fudan-Full, followed by SphereFace64 and FaceResNet. Intuitively, it looks like BatchNorm in Fudan-Full helped a lot, as it behaves like a regularizer. From this comparison we can also deduce which architecture is good for testing a new Data Augmentation technique or a new loss, because it would show even a small gain. In our case it is Light-CNN29, which overfits a lot.

For the detailed analysis we choose the best models: FaceResNet, Light-CNN29, SphereFace64 and FudanFull.
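Why can a small gap in accuracy become a large gap at a fixed FAR? A rough sketch of a verification-rate-at-FAR computation helps to see it. This is a generic illustration, not the exact BLUFR protocol. The function name and the similarity scores are invented.

```python
def verification_rate_at_far(genuine, impostor, far):
    """Share of genuine pairs accepted when at most `far` of impostor pairs pass.

    `genuine` and `impostor` are lists of similarity scores (higher = more similar).
    """
    if not 0.0 <= far < 1.0:
        raise ValueError("far must be in [0, 1)")
    ranked = sorted(impostor, reverse=True)
    # Number of impostor pairs we may accept (epsilon guards float rounding).
    allowed = int(far * len(ranked) + 1e-9)
    # Accept only scores strictly above the (allowed + 1)-th highest impostor score.
    threshold = ranked[allowed]
    accepted = sum(1 for score in genuine if score > threshold)
    return accepted / len(genuine)


# Invented scores: 100 impostor pairs spread over [0, 0.99], 4 genuine pairs.
impostor_scores = [i / 100 for i in range(100)]
genuine_scores = [0.995, 0.985, 0.97, 0.5]

vr_at_1_percent = verification_rate_at_far(genuine_scores, impostor_scores, 0.01)
vr_at_10_percent = verification_rate_at_far(genuine_scores, impostor_scores, 0.10)
```

The threshold is set by the hardest impostor pairs, so at a low FAR only genuine pairs with very high similarity count. A model whose genuine scores sit just below that threshold loses heavily here, even if its accuracy at the best overall threshold is nearly the same.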

IJB-A

Overall, Light-CNN29 is the winner here, losing only on the Rank-1 benchmark. But SphereFace64 is breathing down its neck, being just slightly worse. The results from FudanFull are really bad, and we are not sure what the reason is.

MegaFace

In MegaFace, the identification protocol is won by FudanFull, while the verification protocol is taken by Light-CNN29 (where FudanFull is again the weakest).

Baseline Model

In summary, if we want to choose the best architecture among those tested, Light-CNN29 would be the best, with SphereFace64 right behind. FudanFull works nicely, but in some scenarios its accuracy is too low. This is our podium. Looking more closely at these architectures, the common element is the use of residual connections. But they differ in activation function, in using Pooling vs Convolution with stride 2, and in using BatchNorm. So maybe they are not the best possible architectures? We will leave this question for future tests.

When we compare the results of the current implementation with the results from the papers, most of them match the target accuracy. The only exception is SphereFace, which overfits without DropOut, although the original version does not use it.

When we compare the time needed to get the best results, FaceResNet is the clear winner: it is only slightly weaker than the best model, but it trained almost 3x faster. This is why FaceResNet is chosen as the baseline architecture. It will accompany us throughout the series named Face Recognition. Specifically, both FaceResNet variants will be used, depending on the scenario: when we are reducing overfitting with a new technique, we will use the raw architecture; otherwise the DropOut version will be used.

What next?

Looking at the results, it seems that getting ~98% on LFW using only basic training techniques is easy. These results would have been among the best 3 years ago, but currently they are ~1.5% behind the state of the art. On MegaFace it is even worse, because our result is 20% lower using the same dataset.

How can we boost the accuracy of our model? A lot of researchers propose their own techniques, but will they work in our case? What boost can we gain? We will find out in the next post, and in the near future we will look at the aspect of noise in the training data.

References

- Gradient-Based Learning Applied to Document Recognition

- Deep Learning Face Representation from Predicting 10,000 Classes

- Deeply learned face representations are sparse, selective, and robust

- Learning Face Representation from Scratch

- A Light CNN for Deep Face Representation with Noisy Labels

- Range Loss for Deep Face Recognition with Long-tail

- SphereFace: Deep Hypersphere Embedding for Face Recognition

- Multi-task Deep Neural Network for Joint Face Recognition and Facial Attribute Prediction

- Dropout: A Simple Way to Prevent Neural Networks from Overfitting